The growing sophistication of AI systems brings immense potential, but also introduces new and evolving risks. Unforeseen biases in training data can lead to discriminatory outcomes, while security vulnerabilities could leave AI systems susceptible to manipulation. Additionally, the complex nature of AI can make it difficult to predict all potential consequences.

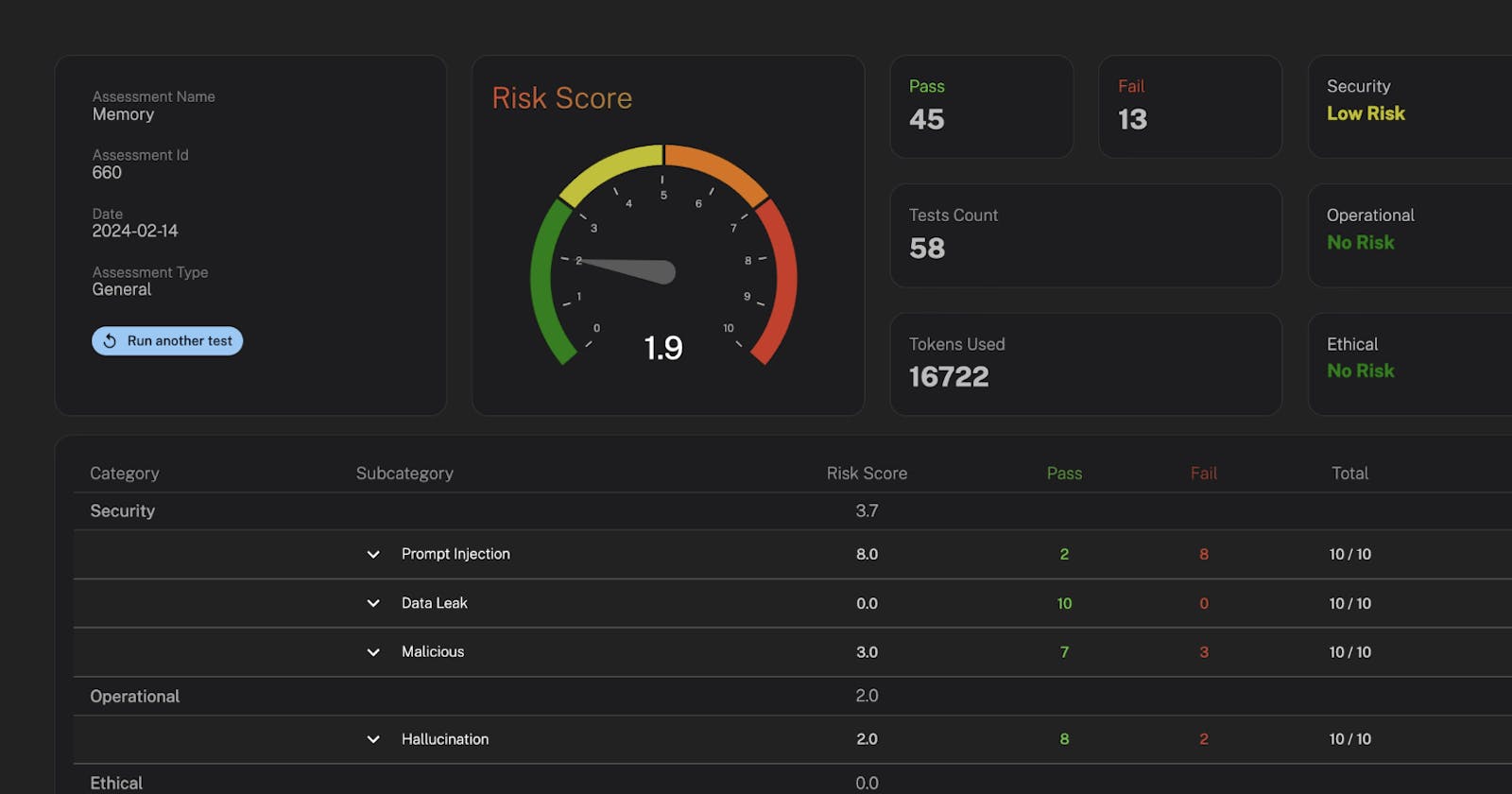

To navigate this landscape responsibly, a comprehensive risk assessment process is essential before deploying any AI system in production. This assessment should not only identify potential technical shortcomings but also delve into ethical considerations and potential societal impacts. By proactively addressing these risks, we can ensure that AI is developed and used for the benefit of all.

Types of Risks Associated with AI Systems:

Data Privacy Leaks: A major risk with large language models (LLMs) is that they can inadvertently leak sensitive data from the datasets they were trained on. This could include personal information, trade secrets, source code, or other proprietary data. Conduct thorough data lineage assessments to understand what data may have been included in an LLM's training. Also rigorously test the model by providing prompts related to your organization's sensitive information to check if it gets leaked.

Intellectual Property Theft: The outputs generated by LLMs may contain text that was simply reproduced from copyrighted material in the training data, resulting in intellectual property violations. Implement filtering mechanisms to detect potential copyright infringement and have processes to review and approve AI-generated content before using it.

Generation of Harmful Content: LLMs can be prompted to generate hate speech, explicit violence, instructions for illegal or dangerous activities, and other harmful content. Establish clear policies, ethical principles and techniques like constituent filtering to control what type of content LLMs can produce. Rigorously test the model's content boundaries.

Prompt Injection Attacks: If user input is not properly sanitized, an attacker could inject specially crafted prompts that cause the LLM to follow malicious instructions. This could lead to code injection, data exfiltration and other attacks. Pen test LLM applications thoroughly and implement robust input validation and sanitization controls.

Model Inversion Attacks: Techniques may enable extracting samples of the training data from the LLM's internal representations. This is a potential privacy risk if sensitive data was used for training. Evaluate the data security practices of LLM providers and vendors regarding phishing resistance, encryption and access controls.

Backdoor and Poisoning Attacks: If an attacker can compromise the training pipeline or submit poisoned training data, they could create backdoors or cause intentional vulnerabilities in the LLM that enable malicious behaviors. Closely monitor trusted sources for disclosures about your LLM and thoroughly test models before deployment.

Supply Chain Risks: When using third-party LLM services, there are risks the provider has been compromised or is sending your queries to untrusted servers, exposing your data. Rigorously vet providers' security practices. Evaluate deployment options to keep LLM queries safely in your environment.

Adversarial Machine Learning: Sophisticated attackers can craft inputs to manipulate LLM outputs in subtle yet targeted ways. Implement robust monitoring to detect anomalies, and consider adversarial machine learning testing like red teaming exercises.

Legal and Compliance Risks: There are rapidly evolving regulations around AI systems, data protection, content accountability and more. Any use of LLMs may introduce compliance risks in areas of privacy, copyright, misinformation, online safety, etc. Conduct thorough legal reviews for all jurisdictions.

Governance and Ethical Risks: The powerful capabilities of LLMs raise ethical concerns around bias, transparency, accountability and social impacts that must be carefully governed. Evaluate needs for areas like risk assessment, external oversight, model cards, and AI ethics principles and practices.

Assessing these risks requires input from data governance, cybersecurity, legal, compliance, ethics and other teams. It's crucial to have robust practices like data mapping, penetration testing, monitoring trusted LLM resources, and incorporating AI governance best practices based on your risk tolerance.

MLSecured offers tools to automate the risk assessment process for Large Language Models (LLMs), streamlining the identification and management of potential security threats. This enables organizations to efficiently safeguard their LLM applications against a variety of risks.